Composition Forum 30, Fall 2014

http://compositionforum.com/issue/30/

Toward a Revised Assessment Model: Rationales and Strategies for Assessing Students’ Technological Authorship

Abstract: I argue in this article that digital composing practices require composition teachers to rethink the way we articulate learning outcomes and conduct classroom assessment. To accomplish this, we must revise the language we use to talk about outcomes and assessment in the context of new media. We also need to better understand how technologies are changing student compositions, thus driving the need to change our learning outcomes and assessment practices. The purpose of this article is to provide rationales and strategies for doing so, as well as classroom activities that can be used to assess new media compositions.

Introduction

In recent years within Rhetoric and Composition, the subfield of computers and writing has begun to pay closer attention to how writing instructors should assess multimedia compositions in classroom-based contexts. We have not, however, looked closely enough at how our assessment practices can and should respond to the technological skills and literacies students must develop to create such texts. As a result, we need to develop revised terminologies, learning outcomes, and assessment practices that allow us to recognize the production aspects of students’ multimedia compositions. Doing so is important as it helps us become more responsive to student work while encouraging students to use and explore technologies that allow them to create dynamic, rhetorically-savvy texts.

With this in mind, I offer new terminology and approaches our field can use to think about and describe new media productions and how we approach our assessment of these texts. I also provide an overview of current assessment models as well as articulate a revised model for assessment that can help us better understand and assess new media compositions within our classrooms. I conclude by highlighting the core strengths of this model and by sharing several classroom activities that support this revised assessment model.

A Definition

In his work, From Pencils to Pixels: The Stages of Literacy Technologies, Dennis Baron wisely reminds us that “[w]e have a way of getting so used to writing technologies that we come to think of them as natural rather than technological. We assume that pencils are a natural way to write because they are old—or at least because we have come to think of them as being old” (33). Today, we assume that computer interfaces are the only “natural way to write” because they mediate most of our composing practices. Many of us cannot imagine getting through a single day without relying on electronic composing tools like Microsoft Word or email. Consequently, these composing environments, like the pencil, have become normalized. So when we ask students to write with these types of tools, we neither acknowledge the tools they use nor assess their ability to use them.

This response is understandable, as standardized technological composing tools like word processors or email do not fundamentally alter the composing process. Many of us approach writing a paragraph with pen and paper in much the same way we compose a paragraph on the screen. New media tools, however, do fundamentally change the way we compose. It is impossible, for example, to replicate the experience of making a video in a Word document or to create web-based graphics in an email as we would in a desktop publishing program. Digital tools, therefore, not only make possible new kinds of compositions but also change the ways in which we compose. This means we need new terminology and frameworks that allow us to articulate these changes.

The difficulty with our current approach to new media assessment is that, as Kathleen Yancey points out, we tend to “use the frameworks and processes of one medium to assign value and to interpret work in a different medium” (90). We often see this process play out as educators look to print-based assessment models and language for evaluating digital compositions. These models are inadequate as they do not recognize that multimedia (or what Claire Lauer describes as tools, resources, and materials) make possible new compositions that require technological skills and literacies that go beyond knowing how to type in a word processor. Readers should note that I use the terms “technological skills” and “literacies” interchangeably as the teaching of new media writing in composition courses (as opposed to programming courses, for example) requires us to teach students both technical skills and techno-rhetorical literacies.

Technological authorship is a term that can be of use as we move toward rethinking the relationship between digital composing, learning outcomes, and assessment. Technological authorship, unlike print-based authorship, acknowledges the tools and technological composing processes an author engages in during the creation of a text. Technological authorship asks students to articulate and reflect on the production aspects of their composing processes (i.e. technical skills, knowledge, and competencies) as well as to consider how these processes open up or constrict certain composing acts.

Technological authorship also recognizes that the processes students use to compose digital texts are fundamentally different from how they compose print texts. I learned this first-hand when creating a remediation project for Kristin Arola’s graduate-level Teaching with Technology course at Washington State University in the fall of 2012. For this project, Kristin asked the class to create a digital timeline that explored an aspect of computers and writing scholarship. We were later asked to repurpose or remediate the project into a non-digital format. The processes I went through in creating the digital and non-digital texts were radically different. When creating the digital composition, I focused intently on experimenting with various technological media, on selecting a rhetorically-compelling medium, and on learning basic HTML code and CSS; these activities were highly technical (at least to me), unfamiliar, and they demanded that I develop new technological skills and literacies.

The process of creating the digital timeline (http://loribethdehertogh.com/techassessment) not only demanded technological literacies but also changed the way I approached my composing process. Instead of sitting down to a familiar Word document to draft and revise a text, I viewed online tutorials, learned HTML code with a program called Codecademy, and tweeted technical questions for help. Later, when I turned to creating the print version of my project (which was a play script), I found the process more intuitive, as it neither required me to learn new technical skills nor fundamentally altered my composing process. This example demonstrates that both the process of composing with new media tools and the products that result differ from those of print-based composition; we therefore need terminology that recognizes these changes.

Although the term “technological authorship” is new, others within composition studies have already begun taking on the work it represents. Jennifer Sheppard in her article, The Rhetorical Work of Multimedia Production Practices: It’s More than Just Technical Skill, emphasizes the need for composition teachers to learn to assess both the “design and production choices” of multimedia texts (129). Kathleen Yancey has written extensively on the topic of assessment, arguing that compositionists need to reconsider the “condition for assessment of digital compositions” as well as the types of compositions digital media make possible (93). More recently, Elyse Eidman-Aadahl et al., in their work Developing Domains for Multimodal Writing Assessment: The Language of Evaluation, the Language of Instruction, stress the need for assessment approaches that recognize the “process management and technique” students must use in “planning, creating, and circulating multimodal artifacts” (n.p.), a philosophy that resonates with the values promoted by the CCCC Position Statement on Teaching, Learning, and Assessing Writing in Digital Environments. My purpose in proposing the term “technological authorship” is to add to these and other scholars’ work and to take us toward the next step in thinking about how we understand and practice new media assessment.

Current Assessment Models

Before outlining the strategies compositionists can use to resolve some of the complications associated with new media assessment, it is helpful to begin with an overview of several assessment models and approaches that continue to play an influential role in our field.

The assessment model most familiar to us has grown out of the process-based writing movement. Sondra Perl's influential work The Composing Processes of Unskilled College Writers made a compelling case for valuing students' composing processes. Later work by Linda Flower and John Hayes supported the theory that writing is a goal-oriented, process-based task that requires pedagogical and assessment models that recognize both process and product.

Current frameworks for assessing new media compositions have grown out of these historic movements. They differ, however, in that these new approaches pay special attention to the differences between print and multimedia compositions. Madeleine Sorapure’s webtext, Between Modes: Assessing Student New Media Compositions, for example, offers strategies for thinking about the need to “attend to the differences between digital and print compositions in order to be able to see accurately and respond effectively to the kind of work our students create in new media” (1). Cynthia Selfe likewise argues that as composition teachers we need to rethink how we “value and address new media literacies” (51), while Pamela Takayoshi indicates that the assessment of digital compositions must take into account the various “literacy acts” students engage in (246).

Building on the theories and models put forth by these scholars, Jody Shipka addresses the complex task of assessing multimodal compositions in her book Toward a Composition Made Whole. Here she offers a framework for considering how to respond to "the production and evaluation of dissimilar texts," or texts that combine print-based materials with multimedia and cross-disciplinary work (112). Shipka argues that one important strategy for effectively assessing these types of student productions is asking students to self-assess their work (112). Shipka's attention to the importance of developing self-reflective criteria for new media composing fits into larger conversations in the field of computers and writing involving scholars such as Michael Neal, Diane Penrod, Troy Hicks, and Anne Wysocki.

Other, more recent models and approaches have also emerged. Colleen Reilly and Anthony Atkins, in their 2013 book chapter Rewarding Risk: Designing Aspirational Assessment Processes for Digital Writing Projects, argue that new media assessment practices should be "aspirational," encouraging risk-taking and inviting students to engage in thoughtful, deliberative, student-driven composing practices (n.p.). Eidman-Aadahl et al., in their highly collaborative work on developing what they call "domains" for multimodal assessment, outline five domains they believe resolve some of the shortcomings of current assessment models: artifact, context, substance, process management and technique, and habits of mind (n.p.). Such criteria draw our attention to the multi-dimensional aspects of new media productions while offering a framework for thinking about multimedia assessment from a localized and situated perspective.

My notion of technological authorship has grown out of the approaches and assessment practices proposed by these scholars, and I have often turned to their work in rethinking my own assessment methods. I still find, however, that despite our movement toward re-envisioning assessment models in the context of new media, not enough attention has been paid to considering the technological aspects of students’ composing processes. Even Gunther Kress and Theo van Leeuwen, in their influential work Multimodal Discourse: The Modes and Media of Contemporary Communication, largely neglect the need to recognize the production aspects of multimodal work. While they argue that both readers and designers should pay attention to the elements central to multimodal composing (discourse, design, production, and distribution), they do not adequately address the need to consider how the technical skills behind these elements play a central role in the composing process.

The limitations of the models and approaches outlined here become most visible when looking at classroom assessment practices. Despite the fact that students who create new media texts engage in technical production, we often focus our assessment energies exclusively on the content students produce, whether it is written text, images, audio, or video. Consequently, we typically offer neither credit nor commentary that connects students' use of multimedia tools to their revision processes, a practice I have seen play out in my own classrooms. In the summer of 2011, for instance, I designed and taught my first Digital Writing Across the Curriculum course, where I asked students to create a multimedia rhetorical analysis essay. Although I commented on the rhetorical aspects of students' work (e.g., design choices, tone, and audience), I did not respond to the production aspects of their projects (i.e., technical skills, knowledge, or competencies), nor did I ask students to reflect on their technological authoring processes.

The biggest drawback of this practice is that I was unable to see the “invisible” moments of invention and revision that would have given me insight into students’ composing processes. Such an understanding could have helped me determine which students may have needed additional guidance or encouragement. It also could have helped me generate commentary that drew students’ attention to the connections between invention, production, revision, and product. Just as importantly, my lack of attention to students’ technological authorship created an environment where students were less likely to tackle new or complicated tools that may have led to more nuanced, rhetorically-savvy texts.

A Revised Model for Assessment

I have titled this section a "revised" (rather than new) model for assessment because I am more interested in encouraging our field to rethink what we do and do not value in our current process-based assessment methods than in overturning current assessment models. Moreover, I do not see this revised model as a panacea for the challenges we face in determining how to assess digital work in our classrooms. Rather, this model gives us a refocused lens for considering how the technological aspects of new media compositions demand that we revise our classroom-based assessment practices.

Before I propose this revised model of assessment, I should clarify what I mean by the word “assessment.” Readers will notice when I talk about assessment in the context of this new model that I frequently use words like “acknowledge,” “value,” and “credential.” These terms reflect a focus more on evaluation than on ranking or grading, which better supports revision-based, reflective composing practices. Carole Bencich articulates the difference between evaluation and grading this way:

Thus, evaluation points to the process of determining worth. Etymologically, it is linked to value, from valere, to be strong. Over the years, value has been associated with the idea of intrinsic worth. When we value something, we hold it in esteem. We do not necessarily rank it according to a formal rubric. A grade is a product, expressed in a number or letter whose meaning is determined by its place in a hierarchy. Evaluation, on the other hand, is often expressed in a narrative which represents the standards and values of the evaluator. Evaluation need not result in a grade. It can be ongoing, with opportunities for revision built in to the process. (50-51)

While the model I propose here can be adapted to either a valuing or grading approach, I believe it is most productive when used as a lens for responding to students' technological authorship. One benefit of responding more closely to the production aspects of student work is that we encourage students to produce better overall products, a process that often leads to higher grades.

In the following subsections, I articulate the learning outcomes and assessment practices that underwrite this revised assessment model. These strategies begin to move us toward more effectively acknowledging students’ technological authorship.

Revising Learning Outcomes

Because digital tools make possible new kinds of compositions as well as change the ways in which students compose, we need to develop revised learning outcomes. Moreover, these new outcomes should reflect students’ need to develop technological skills and literacies (basic coding, graphic design skills, analytical skills, etc.) in order to create digital compositions.

What these new learning outcomes look like will vary from classroom to classroom. But one trait they should all share is a commitment to valuing students' technological authorship. Recognizing this value does not move us away from the goals and outcomes we already hold in writing classrooms or from our focus on the rhetorical aspects of compositions, nor does it threaten to reduce the assessment process to an obsession with technical skill. It instead asks us to see technological composing processes from a more comprehensive point of view. Jennifer Sheppard articulates the value in such a perspective, arguing that:

Oftentimes, however, teaching multimedia production is viewed by those outside of the field as simply a matter of imparting technical skill rather than facilitating development of diverse and significant literacies. A closer look at these practices reveals how the complex choices made during production regarding audience, content, technology, and media can dramatically affect the final text and its reception by users. Rather than viewing multimodal production work as just technical skill, I argue that it requires careful attention to both traditional and technological rhetorical considerations. (122)

As Sheppard illustrates, multimedia composing is not just about technical skill, nor does attending to it put us in danger of reducing either our pedagogical or assessment practices to a focus on technical skill alone. What it does mean is that we need to formulate learning outcomes that recognize these skills.

One approach to developing such learning outcomes is a performance-based evaluation system, or what Stephen Adkison and Stephen Tchudi call “achievement grading” (201). This approach asks students to perform specific tasks or to master learning outcomes such as writing an essay or learning how to use a new tool. Students are then assessed (often based on a pass/fail or credit/no credit system) according to their ability to complete the task. Adkison and Tchudi argue that the major benefit of this approach is students’ ability to “decide for themselves how they will accomplish these tasks, what they want to achieve, and how to go about getting the work done. The ambiguities they must resolve are not between themselves and a teacher’s vague expectations, but between themselves and a task they must achieve” (200). What I like most about Adkison and Tchudi’s method is that it allows educators to create learning outcomes that encourage students to develop technological authoring skills, but the way in which students learn these skills is up to them. This is especially useful if a teacher has given her students a choice as to what kinds of technological tools they can use in performing a task or creating a text. Moreover, this approach frees teachers from worrying about whether or not they should outright grade students’ technical skills.

A more comprehensive approach to designing appropriate learning outcomes that reflect students’ technological authorship is what Grant Wiggins and Jay McTighe call “backward design.” This model asks teachers to start course planning with their desired learning outcomes and to then work backward to create lesson plans, instructional materials, and assessment practices. The model consists of three stages:

- Identify desired results

- Determine acceptable evidence

- Plan learning experiences and instruction

In the first stage, educators should consider their classroom goals, best practices, and curriculum expectations (9). In the second stage, a teacher must begin to "think like an assessor" and determine what evidence will show whether students have achieved the desired learning outcomes (12). In the final stage, educators determine instructional activities that align with the outcomes and evidence established in the first two stages (13).

Wiggins and McTighe’s backward design model gives us a simple yet elegant framework for thinking about how to articulate learning outcomes that recognize students’ technological authorship. As this model illustrates, however, these outcomes must be present in the initial planning stages of a course or assignment. Taking this step is key, as all too often we find ourselves formulating assessment practices for digital compositions in retrospect; it is only after we have assigned and collected students’ projects that we discover, as one anonymous reviewer of this article put it, “what we were really asking them to do.” Wiggins and McTighe’s model pushes us to determine ahead of time the technological composing skills we value and to develop goals and outcomes that help students meet those expectations.

Lastly, teachers must spend time identifying and articulating what they want to teach (Angelo and Cross 8). This is a common-sense statement, but it is easy to get so caught up in designing multimedia projects that we forget why we assigned them in the first place. Most often, we assign them because we are trying to respond to ever-evolving social and educational notions of "composition" or because we want to try something new. But we must remember that when we assign digital projects, we are asking students to develop technological skills and literacies that often go beyond the familiar Word document or PowerPoint presentation. This means our learning outcomes should reflect not only the fact that we value technological literacies but also the processes students undergo in learning them.

The methods and approaches I propose here give us a framework for recognizing that new media tools fundamentally change the way students compose, that this recognition means we need to value technological authorship, and that such values must be reflected in our classroom learning outcomes. By articulating learning outcomes that value students' technological composing processes, we create opportunities to encourage students to use technologies (whether "easy" technologies like PowerPoint or more complex ones like HTML coding) to create rhetorically savvy compositions. In the next section, I outline two assessment strategies, digital badges and self-assessment, that we can use to credential such learning outcomes.

Strategies for Multimedia Assessment

One of the most promising strategies educators can use to assess students' technological authorship is embedded assessment. Embedded assessment is a learning model that recognizes the steps an author takes when creating and designing a product. Jessica Klein, Creative Lead of the Mozilla Open Badges project, describes embedded assessment as a process that allows "tools, learners, and peers to gauge what learners know and how they use that knowledge in action" while also allowing learners to gain recognition for the technological skills and abilities they develop (n.p.). In the context of composition classrooms, embedded assessment reflects the idea that students should receive credit for the steps or processes underlying their composing practices and that digital creations reflect numerous moments of learning as well as multiple acts of creation.

Embedded assessment appears to be an ideal system for tracking and acknowledging students' technological authorship, as it echoes assessment practices already valued within composition studies, a field that has long held that formative, rather than summative, assessment is often most fruitful for students (cf. Paul Black and Dylan Wiliam's 1998 work Assessment and Classroom Learning). Embedded assessment is similar to formative assessment in that it is built on the premise that a student's learning process (and not just the final product) should be valued. It differs, however, from the traditional notion of formative or process-based assessment, which often materializes in the form of the draft process: embedded assessment also recognizes the technical skills and competencies involved in creating new media projects, as well as the steps a composer must take in learning those skills.

A promising tool for putting embedded assessment into action is digital badges, which are symbolic representations of skills, knowledge, and competencies a student has learned. Angela Elkordy, a doctoral candidate at Eastern Michigan University, describes badges this way:

Digital badges are essentially credentials which may be earned by meeting established performance criteria. A digital badge, much like its boy or girl scouts’ cloth counterpart, is an image or symbol representing the acquisition of specific knowledge, skills or competencies. The vision of a digital badging “ecosystem,” that is, a loosely connected framework of badges designed by various authorizers for different purposes, is moving forward to realization. (n.p.)

Digital badges are garnering the attention of educators in a variety of fields and across multiple institutions. Jamie Mahoney, a web developer for the Centre for Educational Research and Development at the University of Lincoln, argues that badges are a valuable tool for assessing student learning outcomes and for data tracking. Daniel Hickey, on his blog Re-mediating Assessment, outlines ways in which he uses digital badges for evaluation in his doctoral course on educational assessment. Purdue University has recently created a pilot program for digital badges called the Passport Badge and Portfolio Platform (http://www.itap.purdue.edu/studio/passport/), and the Digital Media and Learning Competition on Badges for Lifelong Learning, supported by the MacArthur and Bill and Melinda Gates Foundations, is exploring the use of badges in and outside the classroom. These are just a few instances in which badges are being used, piloted, or developed. As we look toward the future, digital badges promise to offer educators exciting new opportunities for evaluating student work.

Digital badges are also a promising assessment tool for composition courses. Although they might be integrated into writing courses in a variety of ways, they are especially useful as a means of assessing students' technological authorship because they "encourage students to demonstrate how they have met very specific learning objectives through actual performance" (Watson n.p.). Students can earn badges as they learn HTML or CSS, master video editing skills, learn a new program, or create web pages. Because badges can be awarded at each of these stages, they have the potential to recognize the individual steps a writer/designer takes when creating a digital text.

Now that I have explained the role embedded assessment and digital badges can play in the assessment of new media compositions, I want to briefly address a series of questions I imagine readers have asked by now: "How far does one go in giving credit to the technical aspects of a new media production? And what happens if one student spends considerably less time than another on the technological aspects of a project? Do they receive the same grade?" These are, of course, valid concerns. I do not have pat answers to such questions, but I can offer two approaches that begin to address them.

My response to the first concern, regarding the extent to which we credit technical skills, necessarily boils down to "it depends." Because writing instruction, and therefore assessment, is contextual and reflects localized programmatic and classroom goals, it should be "tailored to the nature and goals of each project" (Hodgson 204). Simply put, the extent to which a teacher wants to recognize or reward the technical aspects of an assignment should reflect his or her intuition, professional judgment, and classroom and programmatic learning outcomes. If a learning outcome for an assignment requires students to learn a new or complex technology, then I would argue that teachers should look to embedded assessment (whether in the form of digital badges or some other method) to assess students' work. Teachers must also decide for themselves whether to use grades to evaluate students' technological authorship. Some educators might offer participation credit or other non-traditional incentives such as points or badges as a means of recognizing, but not outright grading, the technological skills students learn.

Our assessment practices must also demonstrate fairness. What does a teacher do, for instance, if one student spends more time than another developing technical skills? Do these students, assuming their final products are comparable in substance and quality, receive the same grade? Perhaps the best approach to resolving this problem is self-assessment. Asking students to self-assess their technological composing processes allows them to play a role in determining and valuing what they learn. Moreover, as Crystal VanKooten points out, self-assessment invites students to “reflect on their composition’s effectiveness for a chosen purpose and audience, and to further develop critical literacies” (n.p.). Not only does self-assessment help resolve issues of fairness, but it also democratizes and decentralizes the assessment process. If students take charge of assessing their technological authorship, and if we support and guide them through this process, then we create opportunities where both students and teachers can see the value of technical and textual production.

Closing Thoughts + Assessment Activities

This past summer, I advanced my understanding of how to write HTML code using an online program called Codecademy. If you aren't familiar with this resource, it's a program designed to teach people like me how to "make computers do things." The coolest thing about the program is that as you learn new skills and tools, you earn badges or points. While such rewards may sound juvenile, anyone who has used this program (or any other rewards-based game) knows how motivating they can be. Day after day I found myself obsessed not only with learning code but also with earning those tiny pixelated badges I could tweet about or share with friends.

A resource like Codecademy has two major advantages that we as educators need to harness: it is inherently motivating, and it recognizes technological authorship. The revised model for assessment I've put forth here begins to move us in that direction. This model offers not only learning outcomes and assessment strategies we can use in our classrooms but, more importantly, a revised understanding of students' technological authoring processes. This awareness can, in turn, lead to teaching practices that encourage students to create more dynamic, rhetorically aware, and (to borrow Reilly and Atkins's term) "aspirational" compositions.

In closing, I put the theoretical model into practice by describing several activities teachers might use to assess students’ technological authorship. While these activities resemble those many of us already use to assess process-based writing, they differ in that their particular focus is to help students articulate the technological skills and competencies they have learned and to help educators assess these skills. I encourage readers to modify these exercises in ways that best fit their classroom goals and needs.

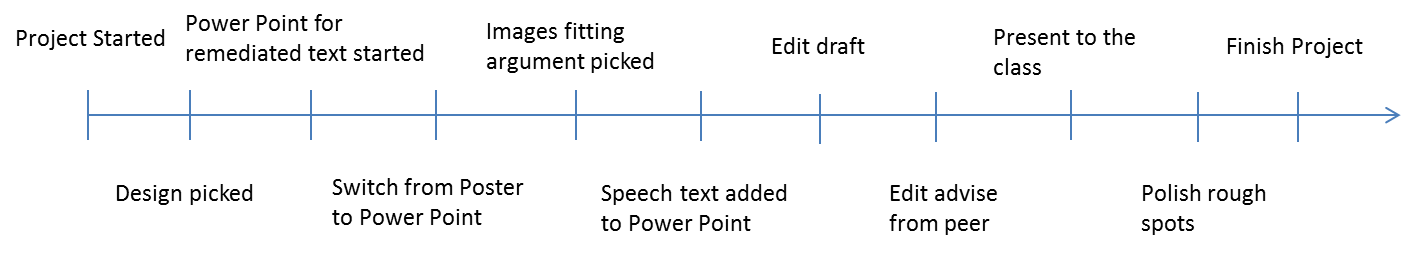

Assessment Activity #1: Statement of Goals & Choices + Process-Based Timeline

This activity asks students to create a timeline that represents the activities they engage in while creating a digital text. The timeline encourages students to create broad yet detailed portraits of what they have or have not accomplished and to determine which aspects of their drafting process they believe are worth assessing. It also gives students a chance to generate a spatial and temporal representation of their composing activities.

Ideally, students create the timeline alongside their projects so they do not forget key composing moments, but it can also be assigned successfully toward the end of a project. For an example of a project this activity might accompany, see this assignment sheet (http://www.loribethdehertogh.com/101/Spring13/wp-content/uploads/2012/11/101_Remediated-Argue..pdf). Below are descriptions and examples of this exercise.

Activity

Prior to submitting their final assignments, ask students to create a digital or print-based timeline that reflects their learning and composing processes. Activities students might add to the timeline include coding, design, research, writing, peer review, and revising. The timeline should not record every single activity students engage in, but rather capture major moments in their composing processes.

After completing the timeline, ask students to draft what Jody Shipka calls a Statement of Goals and Choices (SOGC), in which they explain the “rhetorical, technological, and methodological choices” that influenced their composing process (113). It helps to give students leading questions tailored to the assignment and its learning outcomes. As students draft their SOGC, they should explain not only the rhetorical choices they made in crafting their projects but also how they chose to represent those activities spatially and temporally on their timelines.

Example

Below are two student examples of both a Statement of Goals and Choices and a process-based timeline.

Student Example #1

Statement of Goals & Choices

- The digital argument/text that I am trying to accomplish is to persuade the audience to change the legal driving age from sixteen years old to eighteen years old. I am stating the difference between a young driver at age sixteen and a more developed driver at the age of eighteen and up.

- I chose to use a Google chrome power-point to show visuals in a form of a T-graph. In the power-point the heading states a situation a driver may experience and on the left side is what the sixteen year old driver would do and on the right side of the slide would be the reaction of an older experienced driver. This way it is easier to witness the same situation and how the two drivers would react to the given situation. There weren’t many limitations besides showing an actual filmed video of the different scenarios to really make the audience emotional. Choosing to make a slideshow with visuals and descriptions was the best way to present the remediated persuasive essay.

- As I remediated this project, my argument did not change. In all honesty, remixing this essay into a digital form boosted up my argument by being able to see pictures and reading captions.

- I learned that taking a written essay and remixing it into a remediated digital argument can give the audience and the writer a different perspective. While making this new project and after presenting to a few people I realized that I became more persuaded by the argument.

Timeline

- Brain storm how I want to present project (2 days)

- Choose to make a video; Start outline to make video project

- Ask friends to be a part of the video and make outline (10 minutes)

- Decided it was too hard to do

- Changed idea to make a power-point slide show

- Download Google Chrome (30 minutes)

- Power-point on google chrome

- Come up with scenarios for drivers (2 hours)

- Find pictures (30 minutes)

- Start to create power-point

- Add photos to the slides

- Add descriptions (captions) to the pictures

- Finish (4 days)

Student Example #2

Statement of Goals & Choices

This digital remediation of the paper was made to stimulate an emotional response from the audience by showing them pictures of the present (which is pollution and depletion of our current fossil fuels) and what the future could look like with solar energy. I tried to use a lot of resources and facts to further prove my point and create the logos affect. Finally, I built up my credibility by using sources created by leading experts in their fields that were able to explain topics well. I tried to make this new remediated project for a less educated crowd and our future generations. If they didn’t know much about solar energy, I am able to explain it to them by using this power point.

I chose to do a poster, but after I bought the poster I figured every time I had to edit the power point I would have to rip everything off and redo it after every edit, so I went with a program more familiar to me. I used a power point because I had a bunch of knowledge about it and I have been using it since elementary school. Some limitations constrained how creative I can be by restricting my animations and videos I can add which makes it hard to give an engaging presentation.

My argument started to shift focus when I started using pictures and diagrams. I had to explain their meanings and why I added them to my presentation which changed my focus from future engineers to the general public. In my essay, I talked about how future engineers should consider a career in solar energy and in my remediated project it was all about getting everyone into solar energy.

In this project, I learned how changing the project presentation style could completely change your audience and even the message of your argument. You can use this to your advantage when presenting to a younger generation by making it more engaging or more professional for a more business-like presentation. I can transfer this new knowledge to meetings and future presentations that I give. I can make my work more engaging and interesting by learning how to remediate it.

Figure 1. Example of a student’s process-based timeline.

Assessment Rationale

Unlike most assessment strategies, which provide teachers with limited knowledge of the steps students go through when completing an activity, the SOGC and timeline offer a comprehensive view of key learning moments. They also:

- Invite students to see spatial and temporal relationships between their composing processes;

- Encourage students to identify and explain the rhetorical and technological choices they made in designing their projects;

- Provide instructors with multiple layers for assessment.

Assessment Activity #2: Double-Entry Notes

This activity asks students to keep double-entry notes to record and reflect on their digital composing processes. I find this activity useful because it allows students to chronicle their activities and to observe sometimes overlooked connections between process and product. It is best to introduce students to this activity and explain its purpose before they start their projects. Below is a description of the activity.

Activity

Ask students to set up either a print or an electronic double-entry notebook before beginning their projects. As students create and revise their compositions, they should record on the left-hand side of the page or screen all of the activities they engage in: research, coding, designing, and so on. Entries need not be in chronological order. Emphasize to students that even minor activities should be recorded. Also stress that this part of the note-taking process should focus solely on creating a record of their composing activities, including the technological skills and literacies they develop.

Once students’ projects are complete, they should fill in the right-hand side of each entry. Unlike the first part of the note-taking process, this part asks students to reflect on the information they recorded. Encourage students to use this space to reflect on the literacies they developed, moments of frustration or elation, problem-solving methods, peer review workshops, and other activities they previously documented. This side of the notes should be significantly longer than the left-hand side. Once students have completed their double-entry notes, collect them along with any other materials submitted with the final project.

Example

Below is a brief example of what a student’s double-entry notes might look like if he or she were creating a digital poster. Note that actual student entries will likely be longer and more detailed.

| Activity | Reflection |
|---|---|
| Used Adobe InDesign | I have some experience with InDesign, so I decided to use this program to create my poster. To design my poster, I used various InDesign tools (such as frames, text boxes, and color palettes) as well as the program’s layering features. Each of these tools helped me create a polished and professional-looking product. The one tool that I really struggled with was the layering feature, but now that I’ve created my poster, I think I have the hang of it. |
| Learned basic HTML code | I used several online tutorials, such as Codecademy and YouTube videos, to learn how to write basic HTML code to create a website for my poster. It took me about 5 hours to watch the videos and practice writing code. There were lots of mess-ups along the way. After a while, I realized I didn’t want to create a website for the poster because it was too difficult. |
| Revised the project | Revision was by far the most time-consuming part of my composing process because I had to constantly play around with different InDesign features to figure out how to get my poster to look the way I wanted it to. The program also kept messing up, so sometimes it felt like it took twice as long as it should have to get the work done. Overall, my revisions to the poster probably took about 4-6 hours. |

Assessment Rationale

Student entries might be used to:

- Determine skills and literacies learned by students during the composing process;

- Create a body of information that can be used to compare and contrast various learning moments;

- Observe students’ learning processes over an extended period;

- Connect process and product to key learning outcomes.

Assessment Activity #3: Digital Badges

Digital badges are an excellent way for students to receive acknowledgment for the technological literacies they develop while composing digital texts. The program teachers use to create badges may vary, but badges should always reflect specific learning outcomes. Badges can be used for both individual and collaborative projects. Below is an example of how digital badges might be used.

Activity

Before beginning a digital project, talk to students about the rationale behind using an alternative assessment method like digital badges. It is important that students understand that badges are not simply stand-ins for grades, but tools that can help them demonstrate skills and competencies they have acquired. It may be helpful to compare the process of earning badges to video games where a player earns points, equipment, or power bars as he or she gains new skills. Teachers might also invite students to play a role in establishing badge criteria and in making design decisions.

Once teachers have established an assessment rationale and developed criteria, they can use digital badges to recognize a range of digital composing processes, including but not limited to learning HTML and CSS, developing web design skills, creating information graphics, mastering online programs, and learning new software. Teachers should tailor badges to their particular course learning outcomes and student needs.

Examples

Below are examples of several digital badges I have created using Purdue University’s Passport program. These badges represent some of the technological skills I value in students’ work. To learn more about digital badges, I recommend visiting the curated collection (https://www.hastac.org/collections/digital-badges) from the Humanities, Arts, Science, and Technology Alliance and Collaboratory (HASTAC).

Badge #1: Students earn this badge for learning how to use various technological tools available at Washington State University’s Avery Microcomputer Lab.

Badge #2: Students receive this badge for revising digital compositions.

Badge #3: Students earn this badge for tweeting about technological skills, problems, successes, or concerns they have while composing a digital text.

Assessment Rationale

Digital badges are a relatively new assessment technology in higher education, but they have the potential to:

- Democratize the assessment process;

- Create opportunities for students to share badges with peers;

- Provide formative and summative feedback;

- Disrupt traditional grading and credentialing practices;

- Mimic assessment practices students find in online systems such as social networking sites and video games;

- Create opportunities for student/teacher partnerships in establishing assessment criteria and designing badges.

We know that in the twenty-first century the way students write and the products they produce are changing. The activities described above give us practical and responsible ways to respond to these changes and to recognize the value of students’ technological authorship. Just as importantly, they address a question we often ask ourselves as educators when we receive students’ multimedia projects for assessment: “How do I go about giving this student meaningful feedback as well as a fair grade?” These activities, as well as the revised assessment model I articulate here, can help us begin to answer this question.

Thanks

Many thanks to the Editorial Board at Composition Forum and three anonymous reviewers whose insights helped me substantially improve an earlier version of this text. Special thanks to Kristin Arola, Diane Kelly-Riley, and Mike Edwards, whose insights on early drafts were invaluable.

Works Cited

Adkison, Stephen, and Stephen Tchudi. “Grading on Merit and Achievement: Where Quality Meets Quantity.” Alternatives to Grading Student Writing. Ed. Stephen Tchudi. Urbana, IL: National Council of Teachers of English, 2011. 192-208. Web. 5 Sept. 2013.

Angelo, Thomas, and Patricia Cross. Classroom Assessment Techniques: A Handbook for College Teachers. 2nd ed. San Francisco: Jossey-Bass Publishers, 1993. Print.

Baron, Dennis. “From Pencils to Pixels: The Stages of Literacy Technologies.” Passions, Pedagogies, and 21st Century Technologies. Eds. Gail Hawisher and Cynthia Selfe. Logan: Utah State UP, 1999. 15-33. Print.

Bencich, Carole Beeghly. “Response: A Promising Beginning for Learning to Grade Student Writing.” Alternatives to Grading Student Writing. Ed. Stephen Tchudi. Urbana, IL: National Council of Teachers of English, 2011. 47-61. Web. 5 Sept. 2013.

Black, Paul, and Dylan Wiliam. “Assessment and Classroom Learning.” Assessment in Education 5.1 (1998): 7-74. Print.

“CCCC Position Statement on Teaching, Learning, and Assessing Writing in Digital Environments.” Feb. 2004. Web. 2 Oct. 2014.

Eidman-Aadahl, Elyse, et al. “Developing Domains for Multimodal Writing Assessment: The Language of Evaluation, the Language of Instruction.” Digital Writing Assessment and Evaluation. Eds. Heidi McKee and Dànielle Nicole DeVoss. Logan, UT: Computers and Composition Digital Press/Utah State University Press, 2013. n.p. Web. 12 Aug. 2013.

Elkordy, Angela. “The Future Is Now: Unpacking Digital Badging and Micro-credentialing for K-20 Educators.” 24 Oct. 2012. Web. 15 Nov. 2012. <http://www.hastac.org/blogs/elkorda>.

Kress, Gunther, and Theo van Leeuwen. Multimodal Discourse: The Modes and Media of Contemporary Communication. New York: Oxford UP, 2001. Print.

Hickey, Daniel. “Incorporating Open Badges Into a Hybrid Course Context.” 3 Oct. 2012. Web. 1 Nov. 2012. <http://remediatingassessment.blogspot.com>.

Hodgson, Kevin. “Digital Picture Books: From Flatland to Multimedia.” Teaching the New Writing. Eds. Anne Herrington, Kevin Hodgson, and Charles Moran. New York: Teachers College Press, 2009. 55-72. Print.

Klein, Jessica. “Badges, Assessment and Webmaker+.” 9 Aug. 2012. Web. 3 Sept. 2012. <http://jessicaklein.blogspot.com>.

Lauer, Claire. “Contending with Terms: Multimodal and Multimedia in the Academic and Public Spheres.” Computers and Composition 26 (2009): 225-39. Print.

Mahoney, Jamie. “Designing a Badge System for Universities.” 25 Apr. 2012. Web. 5 Oct. 2012. <http://coursedata.blogs.lincoln.ac.uk>.

Neal, Michael. Writing Assessment and the Revolution in Digital Texts and Technologies. New York: Teachers College Press, 2011. Print.

Penrod, Diane. Composition in Convergence: The Impact of New Media on Writing Assessment. Mahwah, NJ: Lawrence Erlbaum Associates, 2005. Print.

Reilly, Colleen, and Anthony Atkins. “Rewarding Risk: Designing Aspirational Assessment Processes for Digital Writing Projects.” Digital Writing Assessment and Evaluation. Eds. Heidi McKee and Dànielle Nicole DeVoss. Logan, UT: Computers and Composition Digital Press/Utah State University Press, 2013. n.p. Web. 15 Oct. 2013.

Selfe, Cynthia. “Students Who Teach Us: A Case Study of a New Media Text Designer.” Writing New Media: Theory and Applications for Expanding the Teaching of Composition. Logan: Utah State UP, 2004. 43-66. Print.

Sheppard, Jennifer. “The Rhetorical Work of Multimedia Production Practices: It’s More than Just Technical Skill.” Computers and Composition 26 (2009): 122-31. Print.

Shipka, Jody. Toward a Composition Made Whole. Pittsburgh: U of Pittsburgh P, 2011. Print.

Sorapure, Madeleine. “Between Modes: Assessing Student New Media Compositions.” Kairos: A Journal of Rhetoric, Technology, and Pedagogy 10.2 (2006): 1-15. Web.

Takayoshi, Pamela. “The Shape of Electronic Writing: Evaluating and Assessing Computer-Assisted Writing Processes and Products.” Computers and Composition 13 (1996): 245-51. Print.

VanKooten, Crystal. “Toward a Rhetorically Sensitive Assessment Model for New Media Composition.” Digital Writing Assessment and Evaluation. Eds. Heidi McKee and Dànielle Nicole DeVoss. Logan, UT: Computers and Composition Digital Press/Utah State University Press, 2013. n.p. Web. 27 Oct. 2013.

Watson, Bill. “Digital Badges Show Students’ Skills Along with Degree.” Purdue News. 11 Sept. 2012. Web. 5 May 2013. <http://www.purdue.edu/newsroom/releases/2012/Q3/digital-badges-show-students-skills-along-with-degree.html>.

Wiggins, Grant, and Jay McTighe. Understanding by Design. Alexandria, VA: Association for Supervision and Curriculum Development, 1998. Print.

Wysocki, Anne Frances, et al. Writing New Media: Theory and Applications for Expanding the Teaching of Composition. Logan: Utah State UP, 2004. Print.

Yancey, Kathleen. “Looking for Sources of Coherence in a Fragmented World: Notes Toward a New Assessment Design.” Computers and Composition 21 (2004): 89-102. Print.

Toward a Revised Assessment Model from Composition Forum 30 (Fall 2014)

Online at: http://compositionforum.com/issue/30/revised-assessment-model.php

© Copyright 2014 Lori Beth De Hertogh.

Licensed under a Creative Commons Attribution-Share Alike License.
